Unit testing predictions

Gradient Boosting Trees (GPT) is a machine learning algorithm that can be used to make predictions based on data. It is not typically used for unit testing, which is a software testing technique in which individual units or components of a software application are tested in isolation from the rest of the application.

GPT claims that it's possible to use it to assist with unit testing in certain ways. For example, GPT could be used to generate test cases by analyzing the code and predicting the inputs and outputs that are likely to be most relevant for testing. Alternatively, GPT could be used to evaluate the output of test cases by making predictions based on the expected results and comparing them to the actual results. But just because it's written doesn't make it so.

Overall, while GPT is not a traditional unit testing tool, it can be used in conjunction with other testing tools and techniques to help improve the efficiency and effectiveness of the testing process. However, it is important to understand the capabilities and limitations of GPT and to use it in a way that is appropriate for the needs of your project. Or, in other words: you can try, but you have no way of knowing whether the answers GPT gives you are right or utter nonsense.

Lack of information

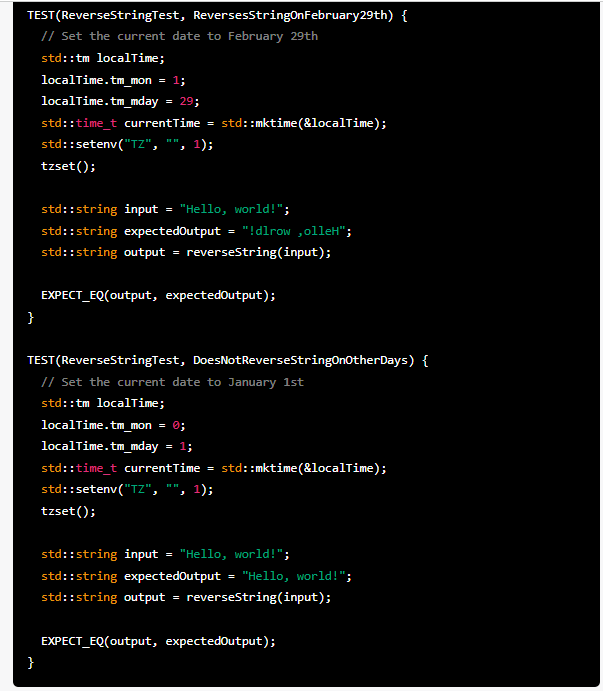

We asked GPT to write a unit test for us. Guess what? He got it all wrong. Everything that comes out of his AI mouth sounds very convincing, and the test he gave us looks sensible, but it is actually wrong!

Do you see the mistake?

Try it yourself; the answer is at the bottom of this post.

Don't count on AIs

GPT is a powerful and widely used machine learning algorithm that can be effective in a variety of situations. However, like any machine learning algorithm, it has its own set of strengths and limitations, and it may not be the best choice for every problem or application.

Here are some potential reasons why a developer might choose not to use GPT:

- The problem being solved is not well suited to a decision tree-based approach. GPT is based on the idea of building an ensemble of decision trees, and may not be as effective for problems that require more complex models or that are better suited to a different type of algorithm.

- The dataset is too large or complex for GPT to be practical. GPT can be computationally intensive, and may not scale well to very large datasets or problems with many features.

- The model needs to be easily interpretable. GPT models can be difficult to interpret, as they are made up of many decision trees, each with its own set of rules and splits. This can make it difficult to understand how the model is making predictions and to identify potential issues or biases.

- There are other, more suitable algorithms available. Depending on the specific requirements of the problem being solved, there may be other machine learning algorithms that are more appropriate to use than GPT. For example, a linear model might be preferred if interpretability is a high priority, or a neural network might be a better choice if the problem requires a highly complex model.

Overall, while GPT can be a useful and effective machine learning algorithm in many situations, it is important for developers to carefully consider the specific needs and constraints of their problem and to choose the most appropriate algorithm for the task at hand.

P.S. The answer:

Setting the system time and leaving it set in the past is bad practice: it leaves other applications failing, emails sent with the wrong date, and other problems. The correct approach is to fake the system time for the test only.

[…] Unit testing with GBT (Shila Toledano) […]