There are twelve principles outlined as part of the Agile Manifesto. Near the bottom, you’ll find these two:

- “Continuous attention to technical excellence and good design enhances agility.”

- “Simplicity—the art of maximizing the amount of work not done—is essential.”

In many cases, they’re overlooked to the detriment of success. Ron Jeffries—you’ll find his name among the original signatories in the Agile Manifesto itself—has a lot to say on the topic. He put out a call for sources specifically covering the topic of “technical excellence.”

> Do me a favor, please. I’m making a list of people, tools, resources, training, products, … that are about the /software development/ aspects of “Agile”.
>
> Even if you think I already know it, please DM or tweet to me names and links of such things. I’ll open DMs.
>
> Ron Jeffries (@RonJeffries), July 16, 2018

Furthermore, on his website, he suggests that we should “abandon ‘Agile.’” But wait—he’s not suggesting we should abandon Agile after all, just the flavors of “Agile” that don’t enable the principles.

Let’s take this as a jumping-off point because he makes some crucial points in that post. Jeffries stresses three essential principles that I’ll paraphrase:

- Always be ready to ship.

- Keep the design clean at all times.

- What’s now and what’s next are the only things that matter.

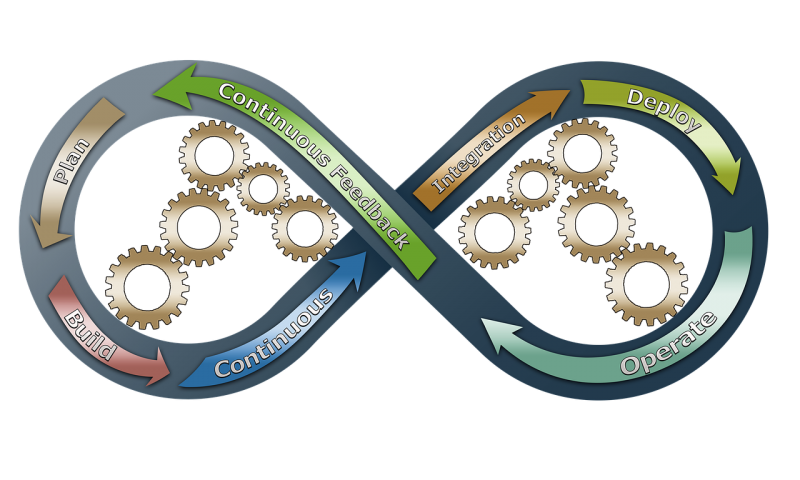

So, how do we as engineers accomplish these feats? That’s precisely what you’re going to get out of this post today. You’ll learn about seven core practices that are critical to succeeding in an Agile and DevOps environment.

1. Refactoring

Refactoring is one of the most important technical skills you can acquire. When we refactor our code, we’re not only reorganizing it, but also refining it. Refactoring makes the code easier to change, understand, and maintain.

Let me give you an example of a classic refactoring that removes duplication. We want to log two different types of messages to the same log while conforming to the format.

Here’s the original version:

```csharp
using System.IO;

public class CallLogging
{
    public void LogIncomingCall(string name, string id, string message)
    {
        using (var append = File.AppendText("calls.log"))
        {
            append.WriteLine($"INCOMING\t{name}\t{id}\t{message}");
        }
    }

    public void LogOutgoingCall(string name, string id, string message)
    {
        using (var append = File.AppendText("calls.log"))
        {
            append.WriteLine($"OUTGOING\t{name}\t{id}\t{message}");
        }
    }
}
```

We can combine almost every bit of the two methods in this CallLogging class. They only differ in the first part of the log message. We’ll use a refactoring from Martin Fowler’s seminal work on the topic: Refactoring: Improving the Design of Existing Code.

Fowler calls this refactoring “Extract Method,” a term you may have seen in your IDE a time or two.

```csharp
using System.IO;

public class CallLogging
{
    public void LogIncomingCall(string name, string id, string message)
    {
        string direction = "INCOMING";
        LogCall(name, id, message, direction);
    }

    public void LogOutgoingCall(string name, string id, string message)
    {
        string direction = "OUTGOING";
        LogCall(name, id, message, direction);
    }

    // Extracted method: the one place that knows the file name and the entry format.
    private static void LogCall(string name, string id, string message, string direction)
    {
        using (var append = File.AppendText("calls.log"))
        {
            append.WriteLine($"{direction}\t{name}\t{id}\t{message}");
        }
    }
}
```

With this type of refactoring, we can create a new private method that’s reusable. It takes the direction variable and handles the details of how to log. This code can be refactored further; however, there’s something else you need before you can go on refactoring.

2. Unit Testing

Legacy code often becomes a legacy because you can’t refactor it. Before you can refactor, you need to have unit tests in place. These tests should be run after every step in the refactoring to make sure you don’t introduce a defect.

Unit testing is an essential part of the process, and mocking and faking are an essential part of unit testing. If you haven’t written unit tests or you’re working on legacy code, it can be tough to figure out where to start. Typemock’s Isolator makes it easier. It allows direct access to private methods and lets you change their behavior, fake existing and future instances of objects, verify public and non-public calls, and much more.
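To make “faking” a bit more concrete without leaning on any particular tool, here’s a minimal hand-rolled sketch. It assumes you’re free to change the logger so that it writes to an injected `TextWriter` instead of opening the file itself. The `WriterCallLogging` class and its constructor are hypothetical and not part of the original example.

```csharp
using System.IO;

// Hypothetical variant of the logger: the destination is injected,
// so a test can pass an in-memory writer instead of a real file.
public class WriterCallLogging
{
    private readonly TextWriter _writer;

    public WriterCallLogging(TextWriter writer)
    {
        _writer = writer;
    }

    public void LogIncomingCall(string name, string id, string message)
    {
        LogCall(name, id, message, "INCOMING");
    }

    public void LogOutgoingCall(string name, string id, string message)
    {
        LogCall(name, id, message, "OUTGOING");
    }

    // Same tab-separated format as the file-based logger.
    private void LogCall(string name, string id, string message, string direction)
    {
        _writer.WriteLine($"{direction}\t{name}\t{id}\t{message}");
    }
}
```

In a test, a `StringWriter` can stand in for the file: pass it to the constructor, log a call, and assert on what the writer captured. Production code would pass a writer that points at the real log file.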

If you’re curious, here’s a sample of a unit test in C# using xUnit:

```csharp
using System.IO;
using System.Linq;
using Xunit;

public class CallLogTests
{
    [Fact]
    public void LogsIncomingWithSpecifiedFormat()
    {
        // Arrange
        File.Delete("calls.log");
        CallLogging log = new CallLogging();
        string name = "Phil";
        string id = "M1234";
        string message = "Let's test that code!";

        // Act
        log.LogIncomingCall(name, id, message);

        // Assert
        var lastLine = File.ReadLines("calls.log").LastOrDefault();
        Assert.Equal("INCOMING\tPhil\tM1234\tLet's test that code!", lastLine);
    }
}
```

This is just a single unit test to test one condition. Usually, we want to test multiple conditions. And that’s what we’ll get into next. (I know. I can’t wait!)

3. Driving Tests With Data

Alright, so I’m a real geek when it comes to this topic. And there’s a reason I use xUnit for unit testing in .NET: you can cleanly drive tests with data.

When you have tests driven by data, you write less code and test more conditions. Let’s continue our logging example so you can see what I mean.

I’ll rewrite the first test so that it takes in parameters. There are a few ways to pass data to the test, but I’ll use “InlineData” to keep it simple. I also have to change the attribute from “Fact” to “Theory” so xUnit will know it’s meant to be driven by data. Now it looks like this:

```csharp
// This method lives inside the CallLogTests class.
[Theory]
[InlineData("Phil", "M123", "Foo", true, "INCOMING\tPhil\tM123\tFoo")]
[InlineData("Phil", "M1235", "Bar", false, "OUTGOING\tPhil\tM1235\tBar")]
public void LogsWithExpectedFormat(
    string name,
    string id,
    string message,
    bool isIncoming,
    string expected)
{
    // Arrange
    File.Delete("calls.log");
    CallLogging log = new CallLogging();

    // Act
    if (isIncoming)
    {
        log.LogIncomingCall(name, id, message);
    }
    else
    {
        log.LogOutgoingCall(name, id, message);
    }

    // Assert
    var lastLine = File.ReadLines("calls.log").LastOrDefault();
    Assert.Equal(expected, lastLine);
}
```

I’ve changed the code to handle multiple cases, but the test I wrote that counts log entries is failing now. Why? It’s reading 11 entries instead of two. Here’s the output for the failure:

```
[xUnit.net 00:00:01.77] agileways.CallLogTests.LogsOnSeparateLines [FAIL]
Failed agileways.CallLogTests.LogsOnSeparateLines
Error Message:
 Assert.Equal() Failure
Expected: 2
Actual: 11
```

The problem is in the test code itself. I’ll leave that as an exercise for you to figure it out. Have fun!

The important thing here is that you’re familiar with data-driven tests because the next practice builds on this concept.

4. Automated Acceptance Testing

Acceptance Test Driven Development (ATDD) is the holy grail of agility. It’s what brings the pieces together and makes your Agile practices whole. When properly practiced, ATDD leads to true collaboration between engineering and the business.

There are different ways to implement ATDD, but the most effective involves three parties:

- A business sponsor

- A quality-minded person

- The implementer

First, these three teammates come together and define the acceptance criteria for the feature. It’s an iterative process: Make the example inputs and expected outputs for a test. Implement. Validate. Iterate.

Gherkin, the plain-text language used by Cucumber, is a typical aid in ATDD since it gives collaborators a common language for writing specifications. Here’s an example of a single criterion written in Gherkin:

```gherkin
Feature: Call Logging
  The service desk would like to log calls so they can review periodically.
  There are learning opportunities in many of the calls and they need
  a way to reference recordings.

  Scenario: Incoming call
    Given 'Martha' calls the service desk
    And the call is tagged with the id 'M1234'
    And the message entered for the call is 'Martha needs to reboot'
    When the call is logged
    Then the log should have the entry 'INCOMING\tMartha\tM1234\tMartha needs to reboot'
```

As you can see, this is basically specification by example. Once written, this file is parsed and sent through a framework that feeds the data to your unit tests.
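To give a feel for what “parsed and sent through a framework” can mean, here’s a deliberately tiny, hand-rolled sketch that matches step text against patterns and collects the quoted values. It is not how Cucumber or any .NET binding library is actually implemented. The `StepMatcher` class, its patterns, and the `BuildIncomingEntry` helper are all assumptions made up for illustration.

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical, simplified stand-in for a specification framework.
public static class StepMatcher
{
    // Each pattern captures the quoted value of one step into a named slot.
    private static readonly (string Slot, Regex Pattern)[] Steps =
    {
        ("name", new Regex(@"^'(.*)' calls the service desk$")),
        ("id", new Regex(@"^the call is tagged with the id '(.*)'$")),
        ("message", new Regex(@"^the message entered for the call is '(.*)'$")),
    };

    // Strips the leading keyword from each line and returns the values
    // captured from the steps that match a known pattern.
    public static Dictionary<string, string> Parse(IEnumerable<string> lines)
    {
        var values = new Dictionary<string, string>();
        foreach (var line in lines)
        {
            var text = Regex.Replace(line.Trim(), @"^(Given|And|When|Then)\s+", "");
            foreach (var (slot, pattern) in Steps)
            {
                var match = pattern.Match(text);
                if (match.Success)
                {
                    values[slot] = match.Groups[1].Value;
                }
            }
        }
        return values;
    }

    // Builds the log entry the scenario expects from the captured values.
    public static string BuildIncomingEntry(IReadOnlyDictionary<string, string> values)
    {
        return $"INCOMING\t{values["name"]}\t{values["id"]}\t{values["message"]}";
    }
}
```

Feeding the three `Given`/`And` lines from the scenario into `Parse` yields the name, id, and message, which a test can then pass to the logger and compare against the entry in the `Then` step. A real framework handles the wiring for you, so the team can stay focused on the examples.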

The tools aren’t as important as the collaboration. However, tools that reduce waste are highly encouraged.

5. Reducing Waste

Waste happens in the normal course of business. You may have heard of the concept of “eliminating waste.” Waste elimination is a lofty goal, and I commend anyone who can truly eliminate waste. But, let’s take a look at waste from another perspective.

As in all things, waste should be looked at from a cost-benefit perspective. Applying the Pareto Principle (or the 80/20 rule) to waste, roughly 20% of the sources of waste account for about 80% of the waste. The trick is finding which 20% to eliminate.

Let’s apply this to unit testing. Say you’re writing unit tests for everything, which is awesome! Now let’s say those tests take about three minutes to run. You run them after every change like a true champ. And now let’s assume you’re not an army of one, but an army of 10. Ten developers running that unit test suite multiple times per day. Let’s do some quick math and get a rough estimate of the wasted cost:

- 10 developers

- Five hours/week per developer spent running tests

- $100/developer hour (estimated total hourly cost of staffed developer)

- 10 × 5 × $100 = $5,000

In this example, running the entire test suite can cost up to $5,000 per week! There’s a lot of waste in there too. Does the whole test suite really need to run every time? No. You only need to run a subset most of the time. Luckily, there’s a tool called SmartRunner that automatically reduces the waste.
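If you want to plug in your own numbers, the estimate above boils down to a small calculation. Here’s a minimal sketch; the `TestRunCost` class and its method names are hypothetical, and it assumes the five hours are per developer, as the multiplication implies.

```csharp
// Hypothetical helper for the back-of-the-envelope estimate.
public static class TestRunCost
{
    // Weekly cost of the developer time spent running tests.
    public static decimal WeeklyCost(int developers, decimal hoursPerDeveloperPerWeek, decimal hourlyRate)
    {
        return developers * hoursPerDeveloperPerWeek * hourlyRate;
    }

    // Number of suite runs per developer per week implied by the time spent.
    public static decimal RunsPerDeveloperPerWeek(decimal hoursPerDeveloperPerWeek, decimal minutesPerRun)
    {
        return hoursPerDeveloperPerWeek * 60m / minutesPerRun;
    }
}
```

`TestRunCost.WeeklyCost(10, 5m, 100m)` gives 5000, matching the figure above. As a sanity check, `TestRunCost.RunsPerDeveloperPerWeek(5m, 3m)` gives 100, or about 20 three-minute runs per developer per working day.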

Speeding up each test run also shortens the feedback loop for failing tests. Developers get feedback sooner, before they pile more code on top of an error.

What other waste can you reduce? What are the cost-benefit ratios of doing so? To find out, you’d have to measure and crunch some numbers in your own environment. In fact, measurement is our next topic. And it’s a touchy subject at that.

6. Measuring Trajectory

There are two common ways to measure trajectory in an Agile world: Sprint Velocity and Lead Time. Timeboxed methodologies use velocity and adjust their iteration commitment based on past performance. But, as we know from that little disclaimer in our 401(k) funds, past performance is no guarantee of future returns. Another way to look at the trajectory is from the longer-term perspective, using averages over a longer period.
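One simple way to take that longer view is to average velocity over the last several sprints instead of reacting to the most recent one. Here’s a minimal sketch; the `Velocity` class and the sample numbers in the note are made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper for smoothing sprint velocity.
public static class Velocity
{
    // Average of the most recent `window` sprints; uses every sprint if fewer exist.
    public static double RollingAverage(IReadOnlyList<int> completedPoints, int window)
    {
        if (completedPoints.Count == 0)
        {
            throw new ArgumentException("No sprints recorded.", nameof(completedPoints));
        }
        if (window <= 0)
        {
            throw new ArgumentOutOfRangeException(nameof(window));
        }
        int skip = Math.Max(0, completedPoints.Count - window);
        return completedPoints.Skip(skip).Average();
    }
}
```

For completed points of 20, 30, 25, and 35, a window of three sprints averages to 30, while a window covering all four gives 27.5. The wider the window, the less a single unusual sprint moves the number.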

Kanban is a process of “pulling” work through the system. It was designed to reduce wasteful inventory in manufacturing. Some of its practices and principles are useful in Agile software development.

One key principle in Kanban is a Work in Progress (WIP) limit. A WIP limit allows us to focus. Another benefit is we can measure how long it takes to produce a feature. A Cumulative Flow Diagram (CFD) is the tool du jour for measuring trajectory in Kanban.
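A CFD is a chart, but the raw numbers behind it are easy to compute. Here’s a minimal sketch of two of them: the average time from start to finish, and a WIP limit check. The `WorkItem` record and the `Flow` class are hypothetical, and the sketch assumes you record a start and finish timestamp for each item.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical record of one finished piece of work.
public record WorkItem(string Title, DateTime Started, DateTime Finished);

public static class Flow
{
    // Average number of days from start to finish.
    // Throws InvalidOperationException if the sequence is empty.
    public static double AverageDaysToFinish(IEnumerable<WorkItem> items)
    {
        return items.Average(item => (item.Finished - item.Started).TotalDays);
    }

    // A new item may be pulled only while the column is below its WIP limit.
    public static bool CanPull(int inProgress, int wipLimit)
    {
        return inProgress < wipLimit;
    }
}
```

Two items that took two and four days average out to three days. With a WIP limit of three, `Flow.CanPull(2, 3)` is true and `Flow.CanPull(3, 3)` is false, which is the nudge to finish something before starting something new.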

As the saying goes, “measure what you treasure.” Once you’ve measured, it’s time to talk and plan for improvement.

7. Continuously Improving

Measurements are just one piece of the continuous improvement puzzle. What’s more, they can backfire when game theory kicks in. Ethics are also important when it comes to measurement-driven improvement. Other extremely important aspects are trust, communications, retrospectives, and empowerment.

In Kanban, there’s an idea that those doing the work are often the ones with the best ideas about how to improve their work. Retrospectives are used to discuss just that. It’s a chance to regularly talk about what isn’t working and what’s working well. It’s important to keep retrospectives focused and to hold them regularly.

Retrospectives are one of the most important tools for going Agile. But let’s be sure to remember that it’s not just about tools. It’s about the culture—which means people. Change is a process, and it takes the right environment to succeed.

Review

Agile and DevOps are about delivery and change, so the approach you take for the implementation is crucial and cannot be taken lightly. Likewise, half-measures will not lead to partial success. They can sabotage the adoption and cause an organization to reconsider the value of it all. These seven practices cover the most often overlooked aspects of an Agile transformation. Success requires discipline, communication, respect, and, let’s not forget, technical excellence. For that reason, it is highly recommended to embark on the transformation process with support from someone who has experience and has led similar transformations in the past.